The Enterprise Gateway for AI Agents and AI Workloads

Unify every call your agents make to APIs, LLMs and MCP servers in a single, secure gateway. Govern traffic, costs and model selection with enterprise‑grade control.

Production‑Grade Infrastructure for AI & API Workloads

Observability

See every API and model call in one place, including agent‑driven requests.

Governance

Set limits, priorities and fallbacks to control how agents consume APIs, LLMs and MCP resources.

Scale

Built for enterprise scale: handle surges while keeping latency low and error rates down.

Govern Every Layer of Your AI Workload

Lunar.dev unifies control over every outbound call your agents or AI application makes — whether it’s to LLMs, third‑party APIs or MCP‑enabled tools. With comprehensive governance and observability built in, you can optimize costs, enforce policies and keep your AI workloads resilient.

Control & optimize calls to LLMs

Full Observability

Track every LLM call with visibility into token usage, cost, latency and errors

Production‑Grade Infrastructure

Self‑hosted clusters with minimal latency and high throughput let you scale AI workloads confidently

Smart Routing & Fallback

Load‑balance across providers and define fallback flows to ensure uninterrupted service

Securely govern access to tools and APIs

Centralized Tool Registry

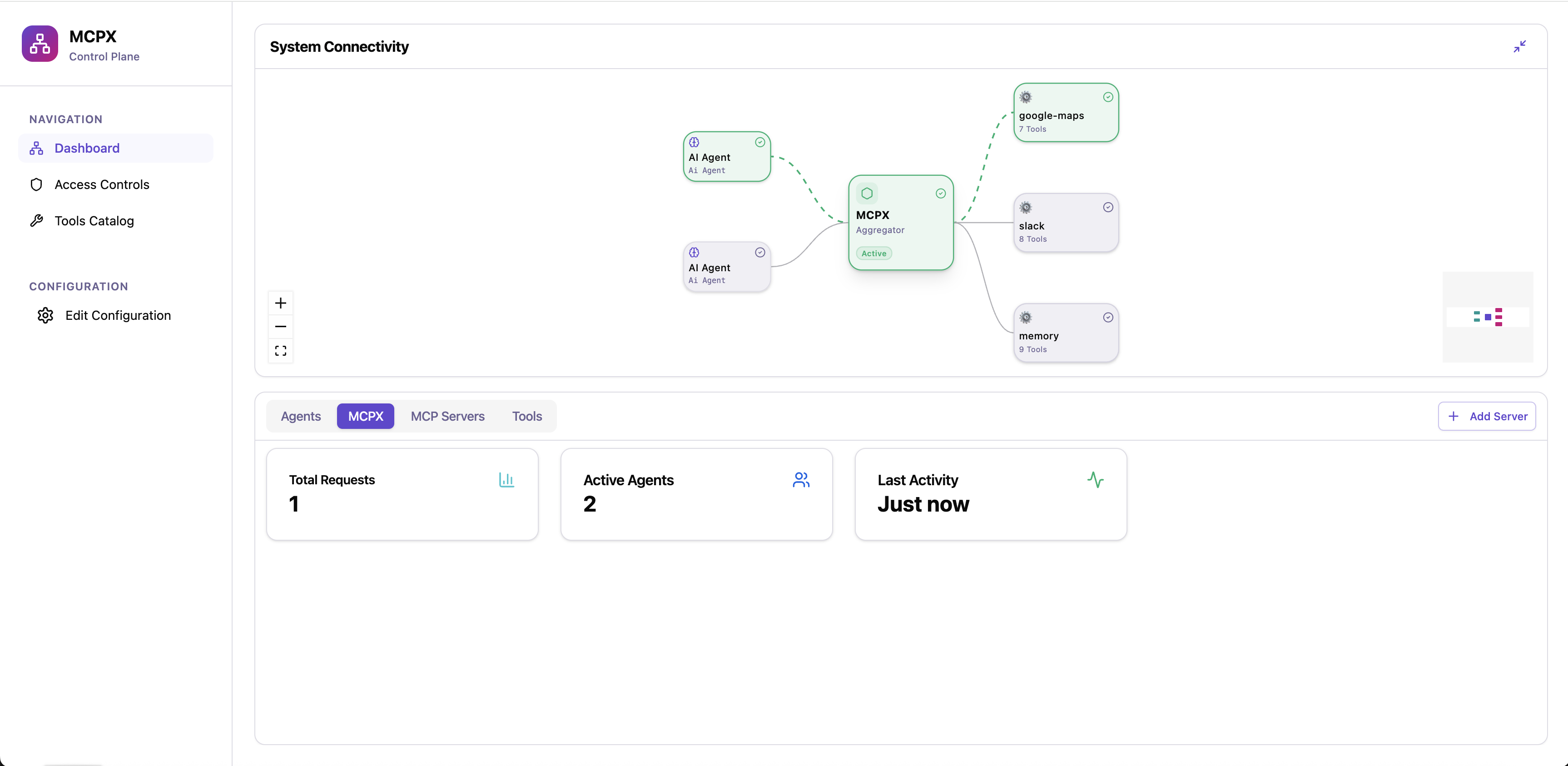

Consolidate multiple MCP servers into a single gateway, making it easy to discover and manage tools.

Fine‑Grained Access Controls

Define permissions for agents and users so only authorized actions can be performed.

Auditing & Enterprise Visibility

Audit every agent call to tools and APIs, and set budget thresholds to manage costs and keep API usage within financial limits.

Comprehensive Stack Governance for Your Agents

Single Control Plane

Orchestrate every call to LLMs, APIs and tools from one gateway, eliminating silos and simplifying your architecture.

Cross‑Agent Coordination

Manage interactions between multiple agents or microservices; enforce priorities, quotas and budgets across all channels.

Holistic Observability & Resilience

Combine metrics and auditing across LLMs, MCP tools and APIs to get a full picture of performance; apply global fallback rules to maintain uptime.

Measurable impact

Measure improvements in your API and AI consumption, costs and error rates.


Improvement in rate limit utilization by proactively managing quotas and enforcing client-side rate limiting.

Of companies spend more time fixing APIs than building new features.

Reduction in API dependency downtime by implementing fallback mechanisms.

Of companies report that third-party API-related issues require weekly attention.

Fault-Tolerant Infrastructure for Governing AI Across the Enterprise

As AI tools and co-pilots spread across teams, organizations face the challenge of governing and monitoring how every user interacts with LLMs, MCP tools and APIs. Lunar.dev provides the infrastructure to enforce policies, maintain visibility and ensure uninterrupted performance, even at enterprise scale and during heavy traffic peaks.

Deploy Across the Enterprise

Roll out Lunar.dev anywhere your AI agents and co-pilots run, from developer sandboxes to production clusters, while maintaining unified governance across all users and teams.

Fail-Safe Governance by Design

Built-in pass-through, fallback and re-routing ensure requests to LLMs, MCP tools or APIs succeed under policy guardrails, even if individual services fail.

Scalable Gateway Clusters for AI Agents

Handle surges in requests from hundreds or thousands of enterprise users without sacrificing latency or control.

Infrastructure & Integration Agnostic

Works in any cloud or on-prem environment, connecting seamlessly to your LLM providers, MCP servers and existing API ecosystem.

Low-Latency Policy Enforcement

Apply access rules, quotas and routing logic with only milliseconds of overhead.

Enterprise-Wide Visibility & Auditing

Monitor and log every call made by any user’s agent to any tool or API, giving security and compliance teams full oversight.

Governance Policies for AI & Co‑Pilots

Simplify governance of AI workloads with Lunar.dev Flows. Flows let you cap requests, set task priorities, manage access, monitor usage, retry failures and route across providers, keeping your agents and applications efficient, compliant and resilient.

Rate Limiting

Prevent over-consumption and stay within provider quotas by capping request rates per user, team, agent or endpoint.
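The Python sketch below illustrates the idea behind per-key rate limiting with a token bucket, which is one common technique for capping request rates. It is not Lunar.dev's implementation or Flow configuration. `TokenBucket`, `KeyedRateLimiter` and their parameters are hypothetical names chosen for this example. The clock can be swapped out so that the demo runs deterministically.

```python
import time
from collections import defaultdict
from typing import Callable


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at `rate` requests/second."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity  # start with a full bucket
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1  # spend one token for this request
            return True
        return False


class KeyedRateLimiter:
    """Keeps one independent bucket per key (a user, team, agent or endpoint ID)."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._buckets = defaultdict(lambda: TokenBucket(capacity, rate, clock))

    def allow(self, key: str) -> bool:
        return self._buckets[key].allow()


if __name__ == "__main__":
    fake_now = [0.0]  # manual clock so the example is deterministic
    limiter = KeyedRateLimiter(capacity=2, rate=1.0, clock=lambda: fake_now[0])
    print([limiter.allow("agent-a") for _ in range(3)])  # [True, True, False]
    print(limiter.allow("agent-b"))  # True: agent-b has its own bucket
    fake_now[0] = 1.0
    print(limiter.allow("agent-a"))  # True: one token refilled after 1 s
```

Each key gets its own bucket, so a burst from one agent cannot consume another agent's quota.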

Priority Queue

Ensure high-value or mission-critical agent tasks are processed first, with configurable queue tiers for different workloads.
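Tiered prioritization can be sketched as a heap ordered by tier, with an insertion counter that keeps tasks in the same tier first-in, first-out. This minimal Python example assumes that a lower tier number means higher priority. `PriorityTaskQueue` and the task names are hypothetical, and the code does not represent how Lunar.dev queues requests internally.

```python
import heapq
import itertools
from typing import Any, List, Tuple


class PriorityTaskQueue:
    """Lower tier number = higher priority; tasks in the same tier stay FIFO."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, Any]] = []
        self._seq = itertools.count()  # tie-breaker preserving insertion order

    def push(self, task: Any, tier: int) -> None:
        heapq.heappush(self._heap, (tier, next(self._seq), task))

    def pop(self) -> Any:
        _, _, task = heapq.heappop(self._heap)
        return task

    def __len__(self) -> int:
        return len(self._heap)


if __name__ == "__main__":
    q = PriorityTaskQueue()
    q.push("batch-report", tier=2)
    q.push("customer-chat", tier=0)
    q.push("nightly-sync", tier=2)
    q.push("fraud-check", tier=0)
    print([q.pop() for _ in range(len(q))])
    # ['customer-chat', 'fraud-check', 'batch-report', 'nightly-sync']
```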

Access Controls

Define who and what can access specific LLMs, MCP tools or APIs, down to individual actions, parameters or resource scopes.
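A deny-by-default allowlist captures the core of action-level access control. In this hypothetical Python sketch, permissions are keyed by (principal, resource), and any action without an explicit grant is refused. The principal, resource and action names are invented. Parameter-level and scope-level constraints are left out for brevity, and the sketch does not describe Lunar.dev's policy format.

```python
from typing import Dict, Set, Tuple


class AccessPolicy:
    """Deny-by-default allowlist of (principal, resource) -> permitted actions."""

    def __init__(self) -> None:
        self._grants: Dict[Tuple[str, str], Set[str]] = {}

    def grant(self, principal: str, resource: str, action: str) -> None:
        self._grants.setdefault((principal, resource), set()).add(action)

    def is_allowed(self, principal: str, resource: str, action: str) -> bool:
        # Anything not explicitly granted is denied.
        return action in self._grants.get((principal, resource), set())


if __name__ == "__main__":
    policy = AccessPolicy()
    policy.grant("support-agent", "github-mcp", "read_issue")
    print(policy.is_allowed("support-agent", "github-mcp", "read_issue"))   # True
    print(policy.is_allowed("support-agent", "github-mcp", "delete_repo"))  # False
    print(policy.is_allowed("billing-agent", "github-mcp", "read_issue"))   # False
```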

Fallback & Retries

Automatically retry failed calls and redirect to backup models or services to keep agents responsive during outages or provider degradation.
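Retries with fallback can be sketched as a loop over providers. Each provider gets a few attempts with exponential backoff before the next one is tried. This minimal Python version is a hypothetical illustration. `call_with_fallback` and its parameters are invented names, not a Lunar.dev API. For simplicity it retries on any exception, whereas a production gateway would normally retry only transient failures such as timeouts or rate-limit responses.

```python
import time
from typing import Callable, Optional, Sequence, TypeVar

T = TypeVar("T")


def call_with_fallback(
    providers: Sequence[Callable[[], T]],
    retries_per_provider: int = 2,
    base_delay: float = 0.1,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Try providers in order; retry each with exponential backoff before falling back."""
    last_error: Optional[Exception] = None
    for provider in providers:
        for attempt in range(retries_per_provider + 1):
            try:
                return provider()
            except Exception as exc:  # a real gateway would retry only transient errors
                last_error = exc
                if attempt < retries_per_provider:
                    sleep(base_delay * 2 ** attempt)  # 0.1 s, 0.2 s, 0.4 s, ...
    raise RuntimeError("all providers failed") from last_error


if __name__ == "__main__":
    calls = []

    def primary() -> str:
        calls.append("primary")
        raise TimeoutError("primary model unavailable")

    def backup() -> str:
        calls.append("backup")
        return "answer from backup model"

    print(call_with_fallback([primary, backup], retries_per_provider=1,
                             sleep=lambda s: None))  # answer from backup model
    print(calls)  # ['primary', 'primary', 'backup']
```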

Routing Between Models

Direct traffic to the most appropriate model or service based on cost, latency, performance or policy.
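One simple routing rule combines two of the criteria named above: among the models that policy allows, pick the cheapest one whose latency fits the request's budget. The Python sketch below assumes per-model cost and p95 latency figures are already known. The model names and numbers are made up for illustration and are not real provider pricing or Lunar.dev routing logic.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Set


@dataclass(frozen=True)
class ModelRoute:
    name: str
    cost_per_1k_tokens: float  # illustrative numbers, not real provider pricing
    p95_latency_ms: float


def pick_route(routes: Iterable[ModelRoute], max_latency_ms: float,
               allowed: Set[str]) -> Optional[ModelRoute]:
    """Cheapest policy-allowed route that meets the latency budget, or None."""
    candidates = [r for r in routes
                  if r.name in allowed and r.p95_latency_ms <= max_latency_ms]
    return min(candidates, key=lambda r: r.cost_per_1k_tokens, default=None)


if __name__ == "__main__":
    routes = [
        ModelRoute("large-model", cost_per_1k_tokens=10.0, p95_latency_ms=1200),
        ModelRoute("mid-model", cost_per_1k_tokens=3.0, p95_latency_ms=600),
        ModelRoute("small-model", cost_per_1k_tokens=0.5, p95_latency_ms=300),
    ]
    everything = {r.name for r in routes}
    print(pick_route(routes, 800, everything).name)  # small-model
    print(pick_route(routes, 800, everything - {"small-model"}).name)  # mid-model
    print(pick_route(routes, 200, everything))  # None
```

When no model qualifies, the function returns `None` rather than guessing. In practice that is the point where a fallback rule would take over.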

Your Team Should Build AI Experiences, Not Gateways.

Skip the heavy lifting:

Use built‑in rate limiting, priority queues, access controls, observability, fallback and model routing instead of building them yourself.

Enterprise‑ready:

Get proven scalability, reliability and security from day one, with no infrastructure team required.

Stay focused on innovation:

Let your engineers deliver AI features while Lunar.dev handles governance and performance.

Frequently asked questions

What is self-managed and what is SaaS?

You deploy the Lunar.dev gateway in your own cloud (self‑managed) so API and agent payloads never leave your environment. The optional SaaS control plane provides a hosted dashboard using only aggregated telemetry and metadata.

What’s the difference between the free version and the paid versions?

The free tier supports one gateway instance with core flows. Paid plans provide gateway clustering for high availability, more throughput and advanced flows with tailored policies.

Will my API calls experience increased latency?

The gateway adds only a few milliseconds of overhead, even at peak load—negligible compared with network latency.

For more details, see our latency and benchmarking analysis, along with a report describing how we run our benchmarks.

Is sensitive data stored by Lunar.dev?

No. Payloads and API calls remain within your infrastructure. Only optional system metrics are transmitted to the SaaS dashboard.

Are there two solutions or a single one?

The MCP Gateway and Lunar AI Gateway can be deployed as standalone solutions. However, integrating them unlocks the full power of an agentic gateway. Book a demo to learn more.

Is Lunar.dev a SaaS solution?

In addition to our OSS and self-hosted versions, we offer a beta SaaS version. To request access or learn more, contact info@lunar.dev.

See Lunar.dev in Action

See Lunar.dev’s Agentic Gateway in action, combining AI Gateway and MCP Gateway for full governance over AI agents, tools and APIs. Discover how centralized management, observability and policy controls can scale across your entire organization.
